Obviously the web was invented to do a completely different job from hosting web applications. When the web was invented, applications were enough of a struggle to create for the local machine, let alone using a distributed architecture. The web delivered hypertext, not applications, and for the job it was originally designed for it was, and still is, a very good system. But using it to host a component-based distributed architecture is like trying to hammer a nail with a screwdriver. We clearly need either to start again or to augment what we have.

Starting again is clearly an attractive option but, despite a number of attempts at creating an “application-based” web, nothing has caught on sufficiently to displace the idea that it is better to keep expanding the capabilities of the existing HTML-based web. You might be tempted to see browser plug-ins like Flash or Silverlight as new architectures, but they too simply extend what we have. To see what extensions are necessary, let’s take a look at what makes HTML and HTTP a problem when it comes to implementing applications.

States

The main problem that any web application developer has to face is instituting a “stateful” interaction. The HTML/HTTP-based web is inherently stateless and, apart from the browser’s history, there is no connection between pages in the sense that they are served in any order to any client. The need to implement a stateful transaction is a surprisingly wide issue. If you are implementing a shopping trolley, a site membership list, or want to save a document on the client machine then you have a problem of preserving state. The reason why these seemingly different problems are a matter of restoring a system state is simply that when the user loads a web page that should use these resources, there has to be some way of identifying the client and making the connection between them and the appropriate resource – be it a shopping trolley, membership or document. In this sense “state” is all about the way identity and resources are tied together. Of course we all know ways of achieving the desired result, the trouble is that we are all so familiar with them that we sometimes fail to see how strange they are.

The first thing to deal with is the issue of where the resources should be stored. In nearly all cases the correct location is the server, as this is the only choice that allows the user to roam to different client locations and still have access to the most up-to-date version of the resource – shopping trolley, membership or document. Notice that even if you do store all user data on the server, you most probably still need to store an identifier on the client machine and hence have access to the local filing system. This problem was supposed to be solved by the invention of the ill-named “cookie”. This allows a very small file containing a restricted range of data to be stored on the client machine for the purpose of identification and state management.
The only problem with the cookie is its name, as many users regard accepting a “cookie” as being akin to taking sweeties from a stranger, so they disable the facility. The name even prompts some users to wage a war against cookies using utilities that remove them from their machine, so defeating any attempt at maintaining a client link to resources stored on the server. If only the cookie had been called a “security blanket”, or something equally unthreatening. Even so there are ways of persuading the user to accept a cookie, and server-side storage and state management isn’t that difficult.
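As a rough illustration of how a cookie ties identity to a resource held on the server, here is a minimal sketch in JavaScript. Everything in it is an assumption made for the example: the cookie name `sid`, the in-memory `trolleys` map standing in for real server-side storage, and the helper names `parseCookies` and `trolleyFor`. The identifier is passed in only to keep the example deterministic; a real server would generate an unguessable random one.

```javascript
// Hypothetical in-memory stand-in for server-side storage, keyed by session id.
const trolleys = new Map();

// Parse a raw Cookie request header ("a=1; b=2") into a plain object.
function parseCookies(header) {
  const cookies = {};
  for (const pair of (header || "").split(";")) {
    const index = pair.indexOf("=");
    if (index === -1) continue; // skip empty or malformed fragments
    const name = pair.slice(0, index).trim();
    const value = pair.slice(index + 1).trim();
    if (name) cookies[name] = decodeURIComponent(value);
  }
  return cookies;
}

// Find the trolley belonging to the client identified by the cookie.
// If the client is unknown, create a trolley under newId and return the
// Set-Cookie value the server would send back with its response.
function trolleyFor(cookieHeader, newId) {
  const { sid } = parseCookies(cookieHeader);
  if (sid && trolleys.has(sid)) {
    return { trolley: trolleys.get(sid), setCookie: null };
  }
  const trolley = [];
  trolleys.set(newId, trolley);
  return { trolley, setCookie: `sid=${newId}; Path=/; HttpOnly` };
}

// First request: no cookie yet, so the server issues an identifier.
const first = trolleyFor("", "abc123");
first.trolley.push("book");

// Later request: the browser sends the cookie back and the state is restored.
const second = trolleyFor("theme=dark; sid=abc123", "unused");
console.log(second.trolley);
```

The only thing stored on the client is the identifier. If the user deletes or refuses the cookie, the second request looks like a first visit and the link to the trolley is lost, which is exactly the failure described above.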

Where this simple idea, that all client storage should be on the server, starts to go wrong is when the data is too sensitive or too large to make off-site storage feasible. Yes, you can create a web-based image editing program, but users will only be happy with it if they restrict their work to fairly low-resolution images. The file size problem is artificial in the sense that increasing the speed of the network connection makes it go away, but the security issue isn’t so easily dismissed. A user will only work with a web-based application that stores sensitive data on the server if it is provably secure in all senses. Currently there is no clear way to do this. So despite the obvious advantages of storing all resources on the server it is still necessary to consider distributed architectures in which the storage is on the client.

The need to store resources on the client is the reason that you encounter questions such as “how do I work with the local file system in JavaScript?”. The answer is always: You can’t. JavaScript doesn’t even have any file handling commands. You can add them on to a JScript program by loading the VBScript FSO object, but this doesn’t work in a wide enough range of environments. To say that you can’t create documents on the client using standard JavaScript isn’t strictly correct, as there are devious ways around the problem. However they are all disastrously inefficient, involving multiple round trips of the entire document – upload existing file, download to browser, allow user to work on data, upload changes, download final version to client. This many round trips makes the file size and security problems worse not better, and because the user has to be involved in each upload/download cycle, complete automation is impossible.

Why bother with the sandbox?

Now the main question makes itself clear – why can’t we just work with the local file system? The answer is equally obvious – security. This time security means keeping the client safe from malware that could use the local file system to store dangerous data or could simply copy sensitive data to another location. But wait – simply forbidding access to the local filing system because it “might be dangerous” is a very blunt weapon in the fight against malware. You could claim that it does us legitimate application architects as much damage as the malware writers! You could also claim that it doesn’t really stop anyone from inventing exploits given sufficient time. At best it simply makes writing malware a little more difficult.

While it is true that security is essential, the whole notion of web pages running in a “sandbox” that keeps them away from any local resources is crazy. We accepted it because in the early days of the web there seemed to be no reason for a script or any active component of a website to have access to local resources. Now the restriction to a sandbox is crippling and one of the main reasons that web applications are hard. After all, ask yourself how reasonable it would be to protect the user from desktop malware by sandboxing every desktop application. Doesn’t work, does it?

An open world

If for a moment you imagine a world in which the sandbox has gone then you can see at once that the web application is at no disadvantage to a desktop application. Indeed a web application is just a desktop application that runs in a browser window and has the advantage of being able to communicate with a server. The sharp distinction between web applications that run in a browser and desktop applications that don’t is reduced to just this difference – the browser is the operating system for the web application. You can’t even make the distinction that a desktop application is locally installed and a web application is mostly downloaded. Given full access to the local machine a web application can install as much of itself as is advantageous. For example, the use of JavaScript widget libraries to extend the typical web page user interface to be the equal of a desktop application is much more efficient if the widget libraries are installed on the client machine and have access to all of the machine’s resources.

So throw out the sandbox and everything just works! Well, no, of course it doesn’t. We still have the problem of protecting the user against malware. It is a no-brainer that the way to do this is to use code signing, and both Microsoft and Mozilla support the use of certificates to sign code – but each does it in completely different ways. In Mozilla’s case the technology is called “object signing”, and can include JavaScript and Java-derived objects. Once signed and verified these objects can be granted extra privileges that allow them to work partly outside of the sandbox. However the user is still explicitly asked if it is OK for the page to access the local resource, and this becomes a little irritating as the question is repeated for every instance. At the script level the trust is activated at a function level, and any additional facilities are available only to code within that function and any functions it might call.
Working with signed code isn’t easy, and there are also issues with the way the system is implemented, in Firefox for example.

Microsoft uses a technology called “Authenticode”, and while this works in a similar way, you can only sign ActiveX controls. What this means is that, at the moment, Mozilla is closer to what we need to create web-based applications that can break out of the sandbox model without lowering the security level too far.

Ajax out of the sandbox

You must have wondered when the Ajax word would appear. The Ajax philosophy makes it even more imperative that the next generation of browsers implement a coherent and standardised security model. An Ajax-based web page maintains its temporary state by simply not allowing the user to navigate to another page – the page is the state. All changes are made by downloading new data to the page and it’s all orchestrated by client-side code. The server is relegated to the job it was always intended to perform – serving up that new data. The client does most of the work and as such it needs access to all of the local machine’s resources. The sandbox approach to security simply has to go.

Dr. Mike James’ programming career has spanned many languages, starting with Fortran. The author of Foundations of Programming, he has always been interested in the latest developments and the synergy between different languages.

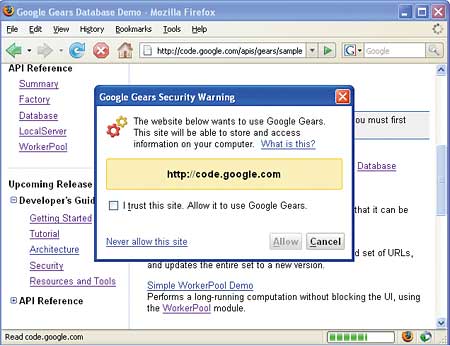

Is it OK to use Gears?

Google Gears – a sandbox solution?

Google Gears might or might not be a solution to the sandbox problem. It’s a clever idea that deserves to be better known. Gears is usually described as a system to allow off-line access to services that were originally intended to work on-line – however this undersells the idea. What it does is to allow limited and controlled access to local storage, albeit in a sophisticated way. Functionally it works as a browser add-on, but all that this does is to permit access to a controlled storage area. All of the facilities of Gears are accessed via an object-oriented JavaScript API. As this is just a standard JavaScript class library it can be used as part of almost any other web technology you care to mention, including ASP.NET.

A Factory class is provided to create all of the other Gears objects. A Database class provides access to a SQLite database system. This acts as a persistent store for any application data stored locally on the client. Data is stored and retrieved using SQL statements. When Gears first creates or uses storage on the client machine it displays a dialog box asking if the user trusts the website that the web page belongs to. The settings can be made to persist so that the user has to opt in just once for each site using Gears. Further security is enforced to make sure that the objects can only open files that were created by the site.

The Database class simply provides local storage, but the LocalServer class makes a connection between client and server storage. Essentially it can be set to cache any server HTTP resource and deliver it from the client if it is more efficient, or if the server isn’t available. A script can also manually select to use the cached or on-line resource if both are available. Resources can either be downloaded on an ad-hoc basis under script control or in a managed fashion as specified in a manifest file. The cached resources can be kept synchronised with the server using a range of automatic and manual methods. To help with the problem of asynchronous update there is also a WorkerPool class which can create what look like individual threads to keep the UI responsive. All resources are stored in a SQLite database.

So what does Gears actually give you? It clearly provides a very quick and easy way of making a site available off-line. All you have to do is create a manifest that lists all of the files that are needed to make your web application work and they are automatically cached. However beyond this it is clear that Gears can be used to allow the user to interact with local documents, modify them and then synchronise them with a server in the background. What Gears doesn’t do is to supply the ability to arbitrarily create documents – you can only create SQLite databases – and it doesn’t allow access to client resources, even existing SQLite databases, that it hasn’t created. You can only open databases created by Gears that correspond to the site trying to open them. There is some suggestion that this might be relaxed in future versions, but at the moment it is a serious restriction. There are almost certainly ways around both of these limitations, but the question of whether or not Gears is a sufficient solution remains to be answered.
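To make the Factory and Database classes described above a little more concrete, here is a minimal sketch of storing and reading back data with SQL. It is written from memory of the Gears beta API, so treat the calls (`factory.create("beta.database")`, `open`, `execute`, and the result-set methods `isValidRow`, `field`, `next` and `close`) as assumptions to check against the Gears documentation. The function name `saveAndListNotes`, the database name `notes-demo` and the `Notes` table are hypothetical, invented for this example. The factory is passed in as a parameter so that the function itself makes no assumption about the environment; in a browser with Gears installed you would pass `google.gears.factory`.

```javascript
// Store a note locally and return every stored note, newest first.
// `factory` is assumed to behave like google.gears.factory.
function saveAndListNotes(factory, text, timestamp) {
  // Assumed Gears call: the factory creates the other Gears objects by name.
  const db = factory.create("beta.database");
  db.open("notes-demo"); // hypothetical database name, private to this site

  // Data goes in and comes out as ordinary SQL statements.
  db.execute("create table if not exists Notes (Body text, Created int)");
  db.execute("insert into Notes values (?, ?)", [text, timestamp]);

  const notes = [];
  const rs = db.execute("select Body, Created from Notes order by Created desc");
  try {
    // Walk the result set one row at a time.
    while (rs.isValidRow()) {
      notes.push({ body: rs.field(0), created: rs.field(1) });
      rs.next();
    }
  } finally {
    rs.close(); // always release the result set
  }
  return notes;
}

// Only attempt real storage where Gears is actually present.
if (typeof google !== "undefined" && google.gears) {
  console.log(saveAndListNotes(google.gears.factory, "hello", Date.now()));
}
```

Note that nothing here names a file or a path. The script can only ask for a database by name, and Gears decides where it lives and which site may open it, which is the restriction discussed above.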
